Human Visual System: A Quick Introduction (Part 3: Interpretation)

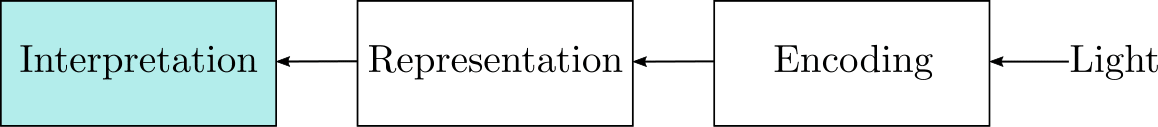

In the previous posts, we discussed how light is encoded and represented by our visual pathways to generate a “neural image”. In this post, we will look at the final stage: interpretation.

Interpretation

Once the visual system has built its neural representation, the next step is to use the limited information available to infer the properties of real-world objects, such as their color, shape, motion, and depth. The secondary visual cortex and the visual association cortex are believed to be responsible for this inference process. The exact algorithms behind these inferences are still largely unknown and remain an active area of study. Below are some leading theories of color and motion perception as examples.

Color

Object color is a psychological construct. We see colors by comparing the relative absorption rates of the L, M, and S cones in response to light reflected from the object. The reflected light L(λ) depends on both the illumination spectrum I(λ) and the object’s surface reflectance R(λ):

L(λ) = I(λ) R(λ)

Hence, color perception is a process of estimating R(λ). However, this problem is underdetermined, as infinitely many combinations of I(λ) and R(λ) can generate the same L(λ). Furthermore, the spectrum L(λ) is subsequently sampled by only three types of cones, each of which integrates light over a broad, overlapping band of wavelengths. This adds to the problem because different spectra L(λ) can produce identical cone responses, a phenomenon called metamerism. Fortunately, not all solutions to this inference problem are equally likely, so our visual system can develop priors about this probability distribution to constrain the solution set. For instance, the spectrum of daylight, the most common light source for most of the Earth’s history, does not change widely or unpredictably with time and location. This makes it possible to draw accurate inferences about surface reflectance using low-dimensional linear models.
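To make metamerism and the linear-model idea concrete, here is a minimal numerical sketch. Everything in it is an assumption made for illustration: the cone sensitivities are toy bell curves rather than measured data, the illuminant is flat and taken as known (standing in for a daylight prior), and the three reflectance basis curves are hypothetical. It first builds two different spectra that produce the same three cone responses, and then shows that once reflectance is restricted to three basis curves, the three cone responses are enough to recover it.

```python
import numpy as np

# Wavelength samples across the visible range (nm, 10 nm steps).
wavelengths = np.arange(400.0, 701.0, 10.0)

def bell(peak_nm, width_nm):
    """Toy bell-shaped spectral curve; illustrative only, not measured data."""
    return np.exp(-0.5 * ((wavelengths - peak_nm) / width_nm) ** 2)

# Hypothetical L, M, S cone sensitivities, one row per cone type.
cones = np.stack([bell(565, 50), bell(535, 45), bell(445, 30)])

# --- Metamerism: two different spectra, identical cone responses ---
bump = bell(500, 15)
# Split the bump into the part the cones can "see" and a residue they cannot.
seen = cones.T @ np.linalg.solve(cones @ cones.T, cones @ bump)
hidden = bump - seen
hidden *= 0.3 / np.abs(hidden).max()   # keep the second spectrum non-negative
spectrum_1 = np.ones_like(wavelengths)
spectrum_2 = spectrum_1 + hidden
responses_1 = cones @ spectrum_1
responses_2 = cones @ spectrum_2

# --- Low-dimensional linear model for reflectance ---
# Assume R(lambda) is a weighted sum of three fixed basis curves and that
# the illuminant I(lambda) is known.
basis = np.stack(
    [np.ones_like(wavelengths), bell(450, 60), bell(620, 60)], axis=1
)
true_weights = np.array([0.2, 0.1, 0.5])
reflectance = basis @ true_weights
illuminant = np.ones_like(wavelengths)
observed = cones @ (illuminant * reflectance)   # the three cone responses

# With I fixed, the responses are linear in the weights: a 3x3 system.
system = cones @ (illuminant[:, None] * basis)
estimated_weights = np.linalg.solve(system, observed)

print("largest spectral difference:", np.abs(spectrum_1 - spectrum_2).max())
print("largest response difference:", np.abs(responses_1 - responses_2).max())
print("recovered weights:", estimated_weights)
```

The recovery in the second half only works because both the illuminant and the basis are assumed known. This is a simplified way of expressing the role that priors play in the text above, not a claim about how the visual system actually computes reflectance.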

Motion

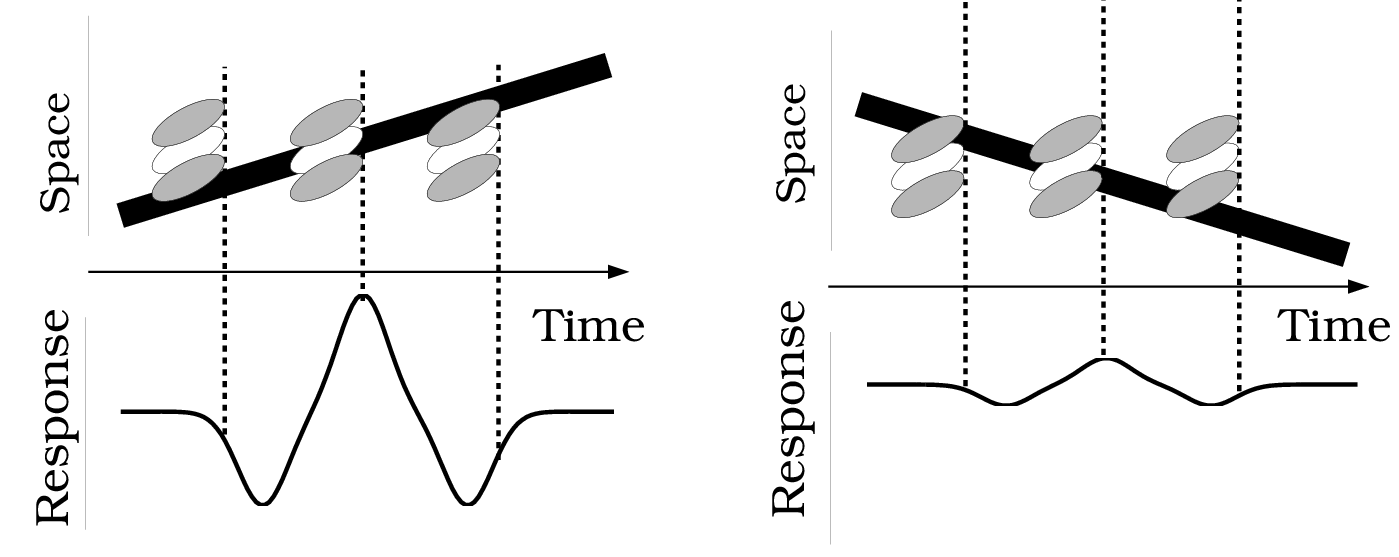

Just like color, motion perception is also an inference problem. Our visual system needs to estimate the relative velocity of an object w.r.t. its surroundings from the two time-varying retinal images. Cortical neurons with oriented space-time receptive fields have been shown to be particularly useful for this task. The figure above demonstrates this idea: space-time-oriented receptive fields respond differently depending on the velocity and direction of a moving edge. A center-surround receptive field is represented using three ellipses, where the light area represents the excitatory region and the dark areas represent the inhibitory regions. In the first case, the moving line and the receptive field share a common orientation, generating a large response. In the second case, they are not well aligned, leading to a reduced response. An array of such neurons can be used to infer the motion field of a dynamic image. In fact, the same ideas form the founding principles of early optical flow methods.
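The alignment argument can be checked numerically with a small sketch. The receptive field below is a toy space-time Gabor (a cosine carrier under a Gaussian envelope), chosen here only as a convenient stand-in for an oriented field with excitatory and inhibitory bands. The grid size, `period`, `sigma`, and `thickness` values are arbitrary assumptions, and the response is a plain weighted sum rather than a model of any real neuron.

```python
import numpy as np

# Space-time grid: columns are positions x, rows are time samples t
# (arbitrary units).
coords = np.arange(-16.0, 17.0)
x, t = np.meshgrid(coords, coords)

def moving_line(velocity, thickness=1.5):
    """Space-time image of a bright line whose position is x = velocity * t."""
    return np.exp(-((x - velocity * t) ** 2) / (2 * thickness ** 2))

def oriented_receptive_field(preferred_velocity, period=8.0, sigma=5.0):
    """Toy space-time Gabor: excitatory (positive) and inhibitory (negative)
    bands run parallel to the trajectory x = preferred_velocity * t."""
    envelope = np.exp(-(x ** 2 + t ** 2) / (2 * sigma ** 2))
    carrier = np.cos(2 * np.pi * (x - preferred_velocity * t) / period)
    return envelope * carrier

def response(velocity, preferred_velocity=1.0):
    """Linear response: stimulus weighted by the receptive field and summed."""
    field = oriented_receptive_field(preferred_velocity)
    return float(np.sum(moving_line(velocity) * field))

for v in (1.0, 0.0, -1.0):
    print(f"line velocity {v:+.1f} -> response {response(v):.4f}")
```

A line moving at the preferred velocity stays inside the central excitatory band and produces a large sum. A static line or one moving in the opposite direction crosses excitatory and inhibitory bands in turn, so its contributions largely cancel.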

The motion in the retinal image is not just generated by object movement; it can also arise from eye movements. Once the interpretation is complete, our brain sends feedback signals to move our eyes (or head). Eye movements can be broadly divided into the following categories:

Saccades are rapid, ballistic eye movements used to change the point of fixation. They can reach velocities of up to 900 deg/s. Our sensitivity to low-frequency light-dark contrast patterns is strongly reduced during saccades, an effect of neural origin known as saccadic suppression.

Smooth pursuit eye movements (SPEM) are much slower voluntary eye movements (0.15 deg/s to 80 deg/s) that track a moving stimulus and keep it stabilized on the fovea. Inconsistencies between SPEM and the motion of the tracked object can destabilize the retinal image and make it appear blurry. The inconsistencies are higher for unpredictable (non-smooth) motion paths and higher velocities.

Vestibulo-ocular movements compensate for head movements by stabilizing our eyes relative to the external world.

Vergence movements adjust the rotation of each eye to align their foveae with stimuli located at different depths. Unlike other eye movements, vergence rotates the two eyes in opposite directions.
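The mismatch between smooth pursuit and target motion mentioned above can be put into numbers with a small back-of-the-envelope sketch. It assumes the common description of pursuit quality as a gain (eye velocity divided by target velocity), and it approximates blur as the distance the image slides across the retina during a hypothetical integration window. The function names and the example values are illustrative assumptions, not measurements.

```python
def retinal_slip(target_velocity_deg_s, pursuit_gain):
    """Residual image velocity on the retina (deg/s) during pursuit.

    pursuit_gain is eye velocity divided by target velocity,
    so 1.0 means perfect tracking.
    """
    eye_velocity = pursuit_gain * target_velocity_deg_s
    return target_velocity_deg_s - eye_velocity


def smear_extent_deg(target_velocity_deg_s, pursuit_gain, integration_time_s):
    """Distance (deg) the image slides on the retina within one
    integration window; integration_time_s is a hypothetical parameter."""
    slip = retinal_slip(target_velocity_deg_s, pursuit_gain)
    return abs(slip) * integration_time_s


# Example: a 20 deg/s target tracked with different gains, 10 ms window.
for gain in (1.0, 0.9, 0.7):
    slip = retinal_slip(20.0, gain)
    smear = smear_extent_deg(20.0, gain, 0.010)
    print(f"gain {gain:.1f}: slip {slip:.1f} deg/s, smear {smear:.3f} deg")
```

With perfect tracking the slip is zero and the image stays put on the fovea. Lower gains, which the text associates with unpredictable paths and higher velocities, leave a residual slip that grows with target speed.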

The visual system combines the information about eye movements with the estimated motion field from the retinal images to improve its prediction accuracy. While the human visual system (HVS) works well at perceiving real motion, we can also perceive motion when none occurs. One important example of such illusory motion is apparent motion: a sensation of continuous motion when presented with a sequence of still images. All modern display systems use apparent motion to recreate a sense of motion while displaying videos and animation. But to create an illusion of smooth motion, we need to ensure that the temporal sampling rate of these displays exceeds the detection capabilities of the HVS; otherwise, observers may end up seeing objectionable motion artifacts.
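One way to see why the temporal sampling rate matters is through ordinary sampling theory. The sketch below is a signal-processing illustration, not a model of HVS detection limits. It assumes a drifting periodic pattern (a grating with a given spatial frequency in cycles per degree) shown on a display with a fixed frame rate, and computes the velocity the sampled sequence is consistent with. The function names and example numbers are assumptions for illustration.

```python
def aliased_temporal_frequency(temporal_freq_hz, frame_rate_hz):
    """Frequency (Hz) a sampled periodic signal appears to have, folded
    into the range [-frame_rate / 2, frame_rate / 2)."""
    half = frame_rate_hz / 2.0
    return (temporal_freq_hz + half) % frame_rate_hz - half


def apparent_velocity(velocity_deg_s, spatial_freq_cpd, frame_rate_hz):
    """Velocity (deg/s) consistent with the sampled frames of a drifting
    grating; equals the true velocity only below the Nyquist limit."""
    temporal_freq = velocity_deg_s * spatial_freq_cpd
    folded = aliased_temporal_frequency(temporal_freq, frame_rate_hz)
    return folded / spatial_freq_cpd


# A 2 cycles/deg grating on a 60 Hz display.
print(apparent_velocity(10.0, 2.0, 60.0))   # 20 Hz, below the 30 Hz limit
print(apparent_velocity(25.0, 2.0, 60.0))   # 50 Hz, above the 30 Hz limit
```

In the second case the pattern's temporal frequency exceeds half the frame rate, so the frames are consistent with a slower drift in the opposite direction. This is the familiar wagon-wheel effect, one example of the motion artifacts that insufficient temporal sampling can produce.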

The color and motion perception discussed above are just two examples of the many object characteristics our visual system aims to infer from the neural image; others include shape, size, depth, shadows, occlusions, and textures. All these separate inferences are integrated into a single holistic explanation for the perceived scene. Developing a computational theory for such integration mechanisms continues to be an active challenge in vision science.