Annoying Motion Artifacts and Where We Find Them

Ever faced annoying artifacts like screen tearing, blurring, or double images while playing video games and wondered where they come from? These artifacts may arise in real-time rendering due to various software and hardware limitations. Let’s investigate some examples of these artifacts, where and when they occur, and how we can mitigate them. All the video simulations of the artifacts below are designed to be viewed on a 60 Hz display.

When a video game's frame rate does not match (and is not synchronized with) the display's refresh rate, the result can be screen tearing or stuttering artifacts. A typical graphics-display pipeline uses two raster buffers (double buffering): a front buffer that is shown on the display and a back buffer that the GPU draws into. When the GPU finishes drawing a frame, the two buffers are swapped. Meanwhile, pixels are scanned out row by row (or column by column) from the front buffer to the display. If a swap occurs before the display scan is complete, the image on the display is made up of parts of more than one frame, causing a tearing artifact.
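To see where the tear line lands, here is a minimal sketch under simplifying assumptions: constant frame times, a swap that happens immediately when a frame finishes (no V-Sync), a linear top-to-bottom scan with no vertical blanking interval, integer frame and refresh rates, and GPU and display both starting at t = 0. The helpers `frame_on_row` and `tear_rows` are hypothetical names used only for illustration.

```python
def frame_on_row(refresh: int, row: int, rows: int, refresh_hz: int, fps: int) -> int:
    """Index of the frame in the front buffer when `row` of refresh `refresh`
    is scanned out, or -1 if no frame has finished yet.

    Assumptions: frame k finishes (and is swapped in immediately) at time
    (k + 1) / fps, and row r of refresh n is scanned at (n + r / rows) / refresh_hz.
    """
    # floor(scan_time * fps) computed with integers to avoid rounding errors
    return ((refresh * rows + row) * fps) // (rows * refresh_hz) - 1


def tear_rows(refresh: int, rows: int, refresh_hz: int, fps: int) -> list[int]:
    """Rows at which the scanned-out image switches to a newer frame (tear lines)."""
    return [
        r for r in range(1, rows)
        if frame_on_row(refresh, r, rows, refresh_hz, fps)
        != frame_on_row(refresh, r - 1, rows, refresh_hz, fps)
    ]


# A 45 fps game on a 60 Hz, 1080-row display (illustrative numbers)
for n in range(4):
    print(n, tear_rows(n, 1080, 60, 45))
```

In this toy setup the tear appears at row 360 in one refresh and at row 720 in the next, so the seam jumps around the screen as the two rates drift in and out of phase; with `fps` equal to `refresh_hz` and aligned start times, no tear appears.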

It is possible to synchronize buffer swaps with the display scan (a technique called V-Sync); however, this may stall the GPU until the scan is complete, reducing performance and adding input lag. Additionally, if the GPU frame rate is even slightly lower than the display's refresh rate, V-Sync ends up repeating frames, leading to stuttering or judder artifacts. Displays that support adaptive sync, such as NVIDIA G-Sync and AMD FreeSync, eliminate these artifacts by adjusting their refresh rate to match the rate at which frames are generated. Because they can refresh at an arbitrary rate (within the display's supported range), they can also save power for static scenes and reduce lag.
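Frame repetition can be illustrated with a small simulation. This sketch assumes a simplified model in which the GPU renders at a fixed rate without being stalled (closer to triple buffering than to the blocking double-buffered V-Sync described above), frame k is ready at time k / fps, and at every refresh the display latches the newest completed frame. `displayed_frames` and `count_repeated` are hypothetical names.

```python
from collections import Counter


def displayed_frames(refresh_hz: int, fps: int, refreshes: int) -> list[int]:
    """Frame index shown at each refresh n (time n / refresh_hz), assuming
    frame k is completed at time k / fps and the newest one is latched."""
    # floor(n * fps / refresh_hz) in integer arithmetic
    return [(n * fps) // refresh_hz for n in range(refreshes)]


def count_repeated(refresh_hz: int, fps: int, refreshes: int) -> int:
    """Number of frames that stay on screen for more than one refresh."""
    counts = Counter(displayed_frames(refresh_hz, fps, refreshes))
    return sum(1 for c in counts.values() if c > 1)


print(displayed_frames(60, 55, 14))  # [0, 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 11]
print(count_repeated(60, 55, 60))    # 5
```

At 55 fps on a 60 Hz display, 5 frames per second are shown twice while the rest are shown once, so motion advances unevenly, which is perceived as stutter.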

Simulated tearing artifacts (watch full-screen on a 60 Hz display)

Simulated V-Sync artifacts (watch full-screen on a 60 Hz display)

When a frame changes, each liquid crystal in an LCD panel needs to transition from one state to another to produce the new color. This change in state is not instantaneous and may not complete within a single frame time. The lagging transition can manifest as trailing artifacts. This problem can be partially alleviated by applying a higher voltage than the target requires (overdrive) to help the pixel reach the desired state faster; however, if the pixel overshoots its target, this can lead to coronas (glowing edges).
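A toy model helps show why overdrive can overshoot. This sketch assumes the liquid crystal follows a first-order (exponential) response with a single time constant `tau_ms`, and that overdrive adds `overdrive_gain` times the size of the transition to the drive level on the frame where the target changes. The model, the function name `simulate_pixel`, and its parameters are illustrative assumptions; real panels have asymmetric, level-dependent response times.

```python
import math


def simulate_pixel(targets, tau_ms, frame_ms, overdrive_gain=0.0):
    """Pixel level (0..1) at the end of each frame under a toy first-order LC model."""
    # Fraction of the remaining distance to the drive level covered in one frame
    alpha = 1.0 - math.exp(-frame_ms / tau_ms)
    level = targets[0]
    prev_target = targets[0]
    levels = []
    for target in targets:
        # Overdrive: push past the target on the frame where the target changes
        drive = target + overdrive_gain * (target - prev_target)
        drive = min(max(drive, 0.0), 1.0)  # the drive range is limited
        level += alpha * (drive - level)
        levels.append(level)
        prev_target = target
    return levels


frame_ms = 1000 / 60
tau_ms = 10.0  # hypothetical response time constant
alpha = 1.0 - math.exp(-frame_ms / tau_ms)
steps = [0.0, 0.0, 0.5, 0.5, 0.5]

no_od = simulate_pixel(steps, tau_ms, frame_ms)                     # falls short: trailing
ideal = simulate_pixel(steps, tau_ms, frame_ms, 1.0 / alpha - 1.0)  # reaches target in one frame
strong = simulate_pixel(steps, tau_ms, frame_ms, 1.0)               # overshoots: corona
print([round(x, 3) for x in no_od])
print([round(x, 3) for x in ideal])
print([round(x, 3) for x in strong])
```

The "ideal" gain only works here because the simulated response is known exactly; any mismatch between the assumed and the actual response leaves either residual trailing or an overshoot.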

Simulated corona artifacts (watch full-screen on a 60 Hz display)

Blur is another common artifact, usually attributable to sample-and-hold displays such as LCDs, which hold each image for the full duration of a frame. When our gaze follows a moving object, the eye moves continuously across pixels that remain stationary throughout the frame. As a result, the image is smeared across the retina, which is perceived as blur. The amount of blur increases with object velocity and decreases with refresh rate (a higher refresh rate means a shorter frame time). Motion blur is especially common in VR headsets, where head movements produce high velocities, and it can contribute to simulation sickness. Blur Busters has a good interactive demo illustrating this phenomenon.
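As a back-of-the-envelope estimate, assuming perfect eye tracking and an image held for the whole frame, the smear width equals the distance the tracked object moves during one frame. `hold_blur_px` is a hypothetical helper name.

```python
def hold_blur_px(speed_px_per_s: float, refresh_hz: float) -> float:
    """Approximate perceived smear width (pixels) on a full-persistence,
    sample-and-hold display when the eye tracks an object perfectly."""
    # The eye keeps moving while the frame is held, so the image slides across
    # the retina by speed * frame_time = speed / refresh_hz during each frame.
    return speed_px_per_s / refresh_hz


for hz in (60, 120, 240):
    print(hz, "Hz:", hold_blur_px(960, hz), "px")
```

For an object moving at 960 pixels per second this gives 16, 8, and 4 pixels of smear at 60, 120, and 240 Hz. The estimate ignores pixel response time, which adds further smear on LCDs.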

Simulated hold-type blur artifacts (watch full-screen on a 60 Hz display)

One common strategy to reduce blur is to shorten the time the image is lit during each frame (known as the display's persistence), for example by strobing the backlight. Examples of this technology include NVIDIA's ULMB (Ultra Low Motion Blur) and BenQ's DyAc. However, reducing persistence can lower the peak brightness of the display and may introduce flickering artifacts when our visual system cannot fully fuse consecutive frames. On low-persistence displays, such as HMDs or film projectors with butterfly shutters, ghosting or false edges can be perceived if the image of a moving object is flashed multiple times in the same location.
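The trade-offs of low persistence can be estimated with the same tracking-eye reasoning. This sketch assumes perfect eye tracking, flashes spaced evenly within each frame, and a time-averaged luminance proportional to the duty cycle (the lit fraction of each frame); `strobed_display`, `StrobeResult`, and their parameters are hypothetical.

```python
from typing import NamedTuple


class StrobeResult(NamedTuple):
    blur_px: float          # smear of each flashed image on the retina
    ghost_offset_px: float  # spacing between repeated images (0 for a single flash)
    mean_luminance: float   # time-averaged luminance relative to the peak


def strobed_display(speed_px_per_s: float, refresh_hz: float,
                    duty_cycle: float, flashes_per_frame: int = 1) -> StrobeResult:
    frame_s = 1.0 / refresh_hz
    # Total lit time per frame is split evenly between the flashes
    lit_per_flash_s = duty_cycle * frame_s / flashes_per_frame
    blur = speed_px_per_s * lit_per_flash_s
    # Repeated flashes show the object at the same screen position while the
    # tracking eye keeps moving, so the copies land this far apart on the retina
    ghost = speed_px_per_s * frame_s / flashes_per_frame if flashes_per_frame > 1 else 0.0
    return StrobeResult(blur, ghost, duty_cycle)


print(strobed_display(960, 60, duty_cycle=0.25))                      # strobed backlight
print(strobed_display(480, 24, duty_cycle=0.5, flashes_per_frame=2))  # two-blade film shutter
```

With a 25% duty cycle, blur drops to a quarter of the full-hold value, but so does the average luminance unless the peak is raised. Flashing each 24 fps film frame twice halves the flicker period but produces two copies of a tracked object about 10 pixels apart in this example.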

Simulated flicker artifacts (watch full-screen on a 60 Hz display)

All displays present a series of static frames to create the illusion of motion. When the number of frames presented per second is not high enough, this illusion breaks, leading to judder or a non-continuous perception of motion.

Simulated judder artifacts (watch full-screen on a 60 Hz display)

Some of the most common artifacts in video games are shimmering, jagged edges, and moiré patterns. The visibility of these artifacts depends on the displayed content. Sampling creates replicas of the signal (also known as aliases) in the frequency domain. When the display's spatial and temporal sampling frequencies (i.e., resolution and refresh rate) are lower than twice the highest frequency of the displayed signal (the Nyquist rate), these replicas fold back into the range of frequencies the display can reproduce and are perceived as these artifacts.
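The folding of frequencies under sampling can be computed directly: a sinusoid of frequency `f` sampled at rate `fs` is indistinguishable from its replica closest to zero frequency. The sketch below uses a hypothetical helper name, `aliased_frequency`, and works for temporal frequencies in Hz or spatial frequencies in cycles per pixel. A negative result means the alias appears mirrored, for example a wheel that seems to spin backwards.

```python
def aliased_frequency(f: float, fs: float) -> float:
    """Apparent (signed) frequency of a sinusoid of frequency f sampled at rate fs.

    Sampling replicates the spectrum at integer multiples of fs; the replica
    nearest to zero is the one that gets perceived. Below the Nyquist limit
    (|f| < fs / 2) the frequency is returned unchanged.
    """
    return f - fs * round(f / fs)


print(aliased_frequency(58, 60))    # wheel turning 58 times/s at 60 fps: appears to turn backwards at 2 Hz
print(aliased_frequency(0.8, 1.0))  # 0.8 cycles/pixel on a 1-sample-per-pixel grid: about -0.2
print(aliased_frequency(0.3, 1.0))  # below Nyquist: unchanged
```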

Simulated aliasing artifacts (watch full-screen on a 60 Hz display)

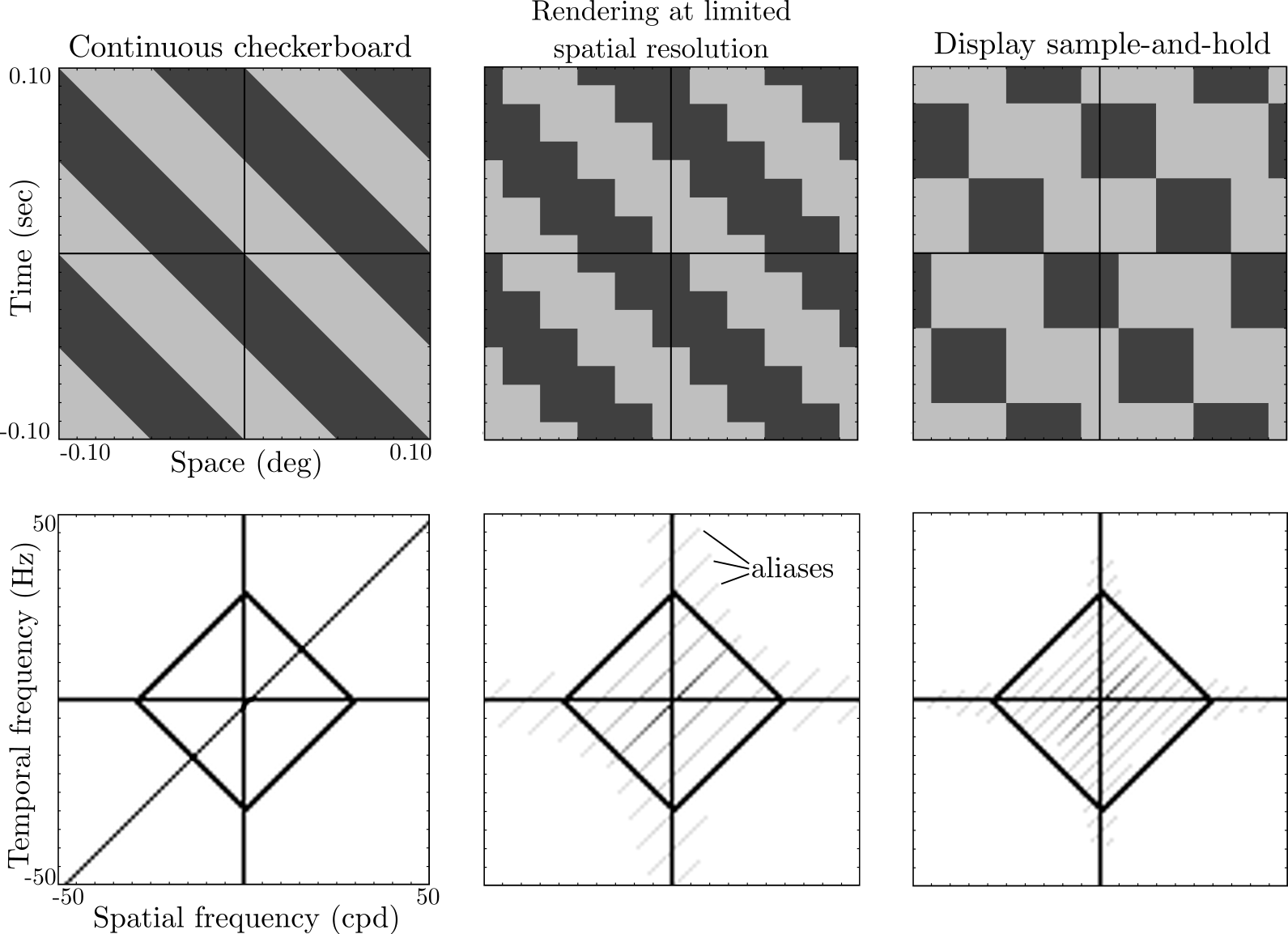

While the visibility of some artifacts can be suppressed by modifying the image content or adjusting the hardware, artifact-free motion rendering is often impossible given current display limitations, and mitigation usually involves trade-offs between different artifacts. One way to predict the visibility of these artifacts is window-of-visibility analysis. The figure shows how displaying a continuously moving 1D checkerboard can give rise to aliases in the frequency domain because of the display's limited spatial and temporal sampling rates. The top row represents the spatio-temporal domain, while the bottom row shows the corresponding frequency spectrum. The diamond shape represents the spatial and temporal limits of human vision, known as the window of visibility. Rendering into a buffer with limited spatial resolution and displaying the image on a screen with a finite refresh rate both create aliases in the frequency domain. If these aliases fall within the window of visibility, they can produce visible artifacts such as blur, judder, and flicker.
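The replica check illustrated in the figure can be sketched numerically. This sketch assumes a single moving sinusoid cos(2*pi*u*(x - v*t)) with spatial frequency `u` (cycles/degree) and velocity `v` (degrees/second), whose spectrum sits at temporal frequency w = -u*v. Spatial sampling at `pixels_per_deg` and temporal sampling at `refresh_hz` copy that point to (u + i*pixels_per_deg, w + j*refresh_hz). The mirrored spectral component at (-u, u*v) produces mirror-image replicas, so with a symmetric window checking one side is enough. The diamond limits `acuity_cpd` and `temporal_limit_hz` are illustrative values, and the function name and the neglect of the display's hold (box) filter, which attenuates but does not remove replicas, are assumptions.

```python
from itertools import product


def visible_aliases(u, v, pixels_per_deg, refresh_hz,
                    acuity_cpd, temporal_limit_hz, max_order=3):
    """Replicas (i, j, u_r, w_r) of a moving sinusoid that land inside a
    diamond-shaped window of visibility |u|/acuity + |w|/temporal_limit <= 1."""
    w = -u * v  # temporal frequency of the moving pattern (Hz)
    hits = []
    for i, j in product(range(-max_order, max_order + 1), repeat=2):
        if (i, j) == (0, 0):
            continue  # the original signal, not an alias
        u_r = u + i * pixels_per_deg
        w_r = w + j * refresh_hz
        if abs(u_r) / acuity_cpd + abs(w_r) / temporal_limit_hz <= 1.0:
            hits.append((i, j, u_r, w_r))
    return hits


# Illustrative numbers only: 2 cycles/deg pattern moving at 10 deg/s,
# 60 pixels/deg display, window limits of 30 cycles/deg and 60 Hz.
print(visible_aliases(2, 10, 60, 60, 30, 60))   # temporal replica at 40 Hz falls inside
print(visible_aliases(2, 10, 60, 120, 30, 60))  # at 120 Hz no replica is visible
```

Raising the refresh rate pushes the temporal replicas farther along the frequency axis and out of the diamond, which is the same trade-off the figure illustrates.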